Introduction

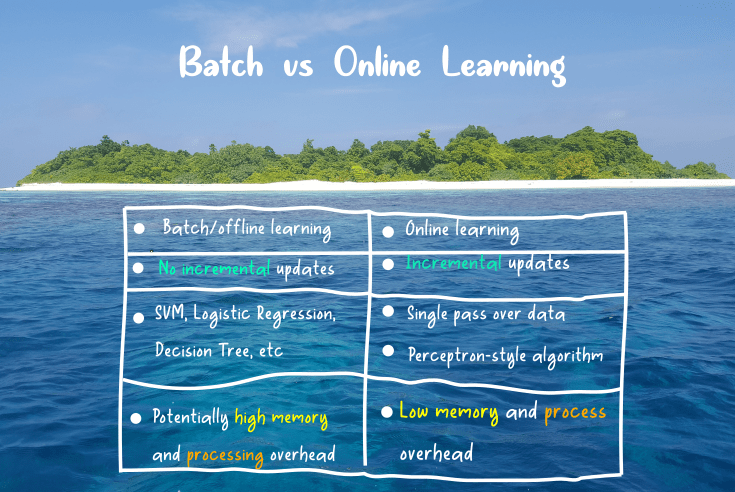

Machine learning systems can be classified into two main categories by how they learn: batch learning and online learning. In batch learning, the model is trained offline on the full dataset, while in online learning data flows into the learning algorithm as a continuous stream. In this article, you will learn:

- A gentle introduction to batch learning.

- The problems with batch learning.

- How online learning solves those problems.

So let’s begin…

What is Batch Learning?

Data preprocessing is an important step in machine learning projects. It includes activities such as data cleaning, data reduction, splitting the dataset into training and testing sets, and data normalization. Training an accurate model usually requires a large dataset. In batch learning, we use all the data we have for training. Therefore, the training process takes time and requires significant computational power.
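The steps above can be sketched in a few lines of Python. This is a minimal illustration rather than a production pipeline: the toy data, the helper names (`clean`, `split`, `normalize`, `train_batch`, `predict`) and the nearest-centroid model are all assumptions made for the example, and data reduction is left out for brevity.

```python
import random
from statistics import fmean, pstdev

def clean(rows):
    """Data cleaning: drop rows whose feature value is missing."""
    return [(x, label) for x, label in rows if x is not None]

def split(rows, test_ratio=0.2, seed=0):
    """Shuffle, then split into (training, testing) sets."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_ratio)
    return shuffled[n_test:], shuffled[:n_test]

def normalize(rows, mean, std):
    """Normalization: rescale features with statistics from the training set."""
    return [((x - mean) / std, label) for x, label in rows]

def train_batch(train):
    """Batch training: one pass over ALL training rows, one centroid per class."""
    by_class = {}
    for x, label in train:
        by_class.setdefault(label, []).append(x)
    return {label: fmean(xs) for label, xs in by_class.items()}

def predict(model, x):
    """Predict the class whose centroid is closest to x."""
    return min(model, key=lambda label: abs(x - model[label]))

# Hypothetical toy data: (feature, label) pairs, one with a missing value.
raw = [(1.0, "low"), (1.2, "low"), (None, "low"), (0.8, "low"),
       (1.1, "low"), (0.9, "low"), (5.0, "high"), (5.2, "high"),
       (4.8, "high"), (5.1, "high"), (4.9, "high")]

train, test = split(clean(raw))
train_x = [x for x, _ in train]
mean, std = fmean(train_x), pstdev(train_x)
train, test = normalize(train, mean, std), normalize(test, mean, std)

model = train_batch(train)  # the whole training set is used at once
correct = sum(predict(model, x) == label for x, label in test)
accuracy = correct / len(test)
print(f"trained on {len(train)} rows, test accuracy = {accuracy:.2f}")
```

Note that `train_batch` needs the complete training set before it can produce a model, which is the defining property of batch learning.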

What is happening under the hood?

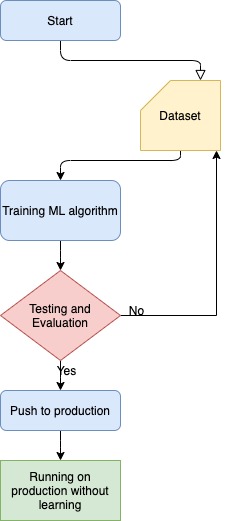

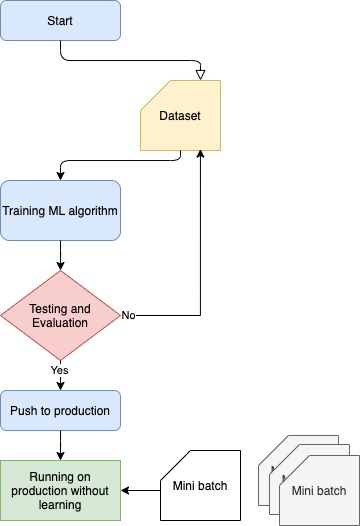

After the model is fully trained during development, it is deployed to production. Once deployed, the model relies only on what it learned from the data we used to train it. We cannot feed it new data directly and let it learn on the fly.

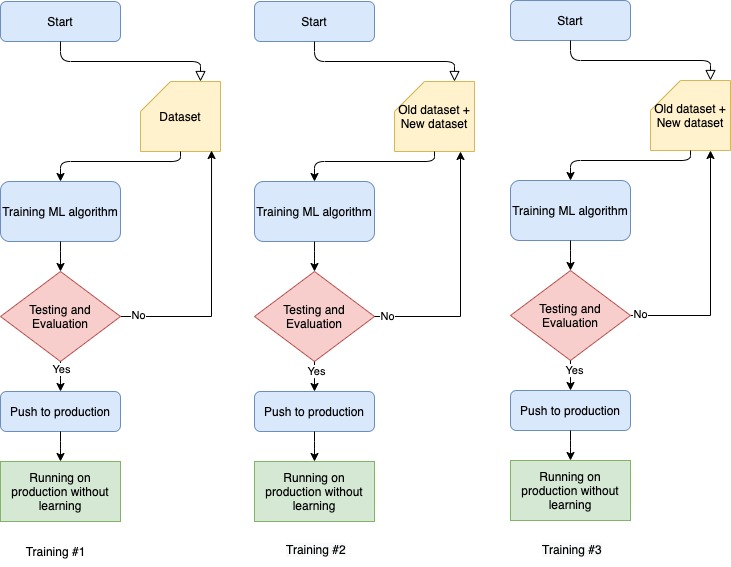

If we want to use new data, we need to start from scratch. We have to take the deployed model down, combine the new data with the old data, and train the model again. Once the model is fully trained on the combined dataset, we deploy it to production again.
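As a rough sketch of this retraining cycle, the example below fits a simple line with ordinary least squares, then retrains from scratch once new data arrives. The data values and the `train_from_scratch` helper are hypothetical and only serve to show that the old data must be kept and processed again.

```python
from statistics import fmean

def train_from_scratch(xs, ys):
    """Fit y = w*x + b by ordinary least squares over the full dataset."""
    mean_x, mean_y = fmean(xs), fmean(ys)
    var_x = sum((x - mean_x) ** 2 for x in xs)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    w = cov_xy / var_x
    b = mean_y - w * mean_x
    return w, b

# Hypothetical original training data (follows y = 2x exactly).
old_x = [1.0, 2.0, 3.0, 4.0]
old_y = [2.0, 4.0, 6.0, 8.0]
model_v1 = train_from_scratch(old_x, old_y)  # deployed to production

# New data arrives later; the deployed model cannot absorb it directly.
new_x = [5.0, 6.0]
new_y = [13.0, 15.0]

# Batch retraining: old and new data are combined and the fit starts over.
all_x = old_x + new_x
all_y = old_y + new_y
model_v2 = train_from_scratch(all_x, all_y)  # replaces model_v1 in production

records_processed = len(all_x)  # every record, old and new, is read again
print(model_v1, model_v2, records_processed)
```

Only two new records arrived, yet the second training run had to read all six.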

Retraining this way is not a complex process, and in most cases it works without any major issues.

If we want to retrain the machine learning model every 24 hours or every week, training from the beginning each time becomes very time consuming and expensive. Training on both new and old data requires not only time and computational power but also large amounts of disk space and disk management, which cost money as well.

This is fine for small projects, but it gets tough in real-time settings where data arrives continuously from various endpoints such as IoT devices, computers, and servers.

| Training # | Dataset size | Disk space (TB) |
| --- | --- | --- |
| 1 | 1,000,000 | 100 |
| 2 | 2,000,000 | 200 |
| 3 | 3,000,000 | 300 |
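The growth in the table can be written out as simple arithmetic. The sketch below assumes the pattern in the table continues linearly and reads the dataset column as a count of records, which the table does not state explicitly. The constant and function names are made up for the example.

```python
# Figures taken from the table above; the linear pattern is an assumption.
NEW_RECORDS_PER_ROUND = 1_000_000  # dataset grows by this much each round
TB_PER_MILLION_RECORDS = 100       # 100 TB of disk per 1,000,000 records

def dataset_size(training_round):
    """Records that must be stored and retrained on in a given round."""
    return NEW_RECORDS_PER_ROUND * training_round

def disk_space_tb(training_round):
    """Disk space needed to hold the full dataset in a given round."""
    return dataset_size(training_round) * TB_PER_MILLION_RECORDS // 1_000_000

total_processed = 0
for training_round in range(1, 4):
    size = dataset_size(training_round)
    total_processed += size  # each retrain reads the whole dataset again
    print(f"Training {training_round}: {size:,} records, "
          f"{disk_space_tb(training_round)} TB")

print(f"Records processed across all three trainings: {total_processed:,}")
```

Under these assumptions, three rounds of retraining read 6,000,000 records in total even though only 3,000,000 distinct records exist, because earlier data is processed again in every round.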

Disadvantages of batch learning

The main disadvantages of batch learning are:

- The model does not learn in production. We need to retrain it every time new data arrives.

- Disk management is costly. As the dataset grows, it requires more disk space.

- Training a model on a large dataset costs time and computational resources.

Online learning

To solve the issues we face with batch learning, we can use a method called online learning. In online learning, the model keeps learning from new data while it is in production. The model is trained on small batches of data, known as mini batches. We will look more closely at online learning in another article.
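To make the idea concrete, here is a minimal sketch of learning from mini batches as they arrive. It uses plain stochastic gradient descent on a one-feature linear model, which is one common way to do online learning but not the only one. The simulated `stream` generator, the learning rate, and the target line y = 3x + 1 are assumptions chosen for the example.

```python
import random

def sgd_update(w, b, batch, lr):
    """One gradient step on mean squared error for y = w*x + b."""
    n = len(batch)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in batch) / n
    return w - lr * grad_w, b - lr * grad_b

def stream(n_batches, batch_size=4, seed=0):
    """Hypothetical data stream: yields mini batches drawn from y = 3x + 1."""
    rng = random.Random(seed)
    for _ in range(n_batches):
        xs = [rng.uniform(-1.0, 1.0) for _ in range(batch_size)]
        yield [(x, 3.0 * x + 1.0) for x in xs]

w, b = 0.0, 0.0  # the model starts untrained
for batch in stream(2000):
    # The model is updated in place from each mini batch.
    w, b = sgd_update(w, b, batch, lr=0.1)
    # After the update the batch can be discarded; no old data is stored.

print(f"w = {w:.3f}, b = {b:.3f}")
```

Unlike the batch approach, each update touches only the current mini batch, so the cost of an update does not grow with the amount of data seen so far.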

Conclusion

In this article, we looked at the batch learning strategy and how it works. We’ve highlighted the disadvantages of batch learning and how online learning can overcome them. Hopefully you now have a better understanding of batch learning.