I had the opportunity to stay at one of the finest water villa resorts in the Maldives, Gili Lankanfushi (No News No Shoes), for free. Yes, for free!

So why not learn something while I am here? Thanks to the Gili team and management.

Let’s begin….

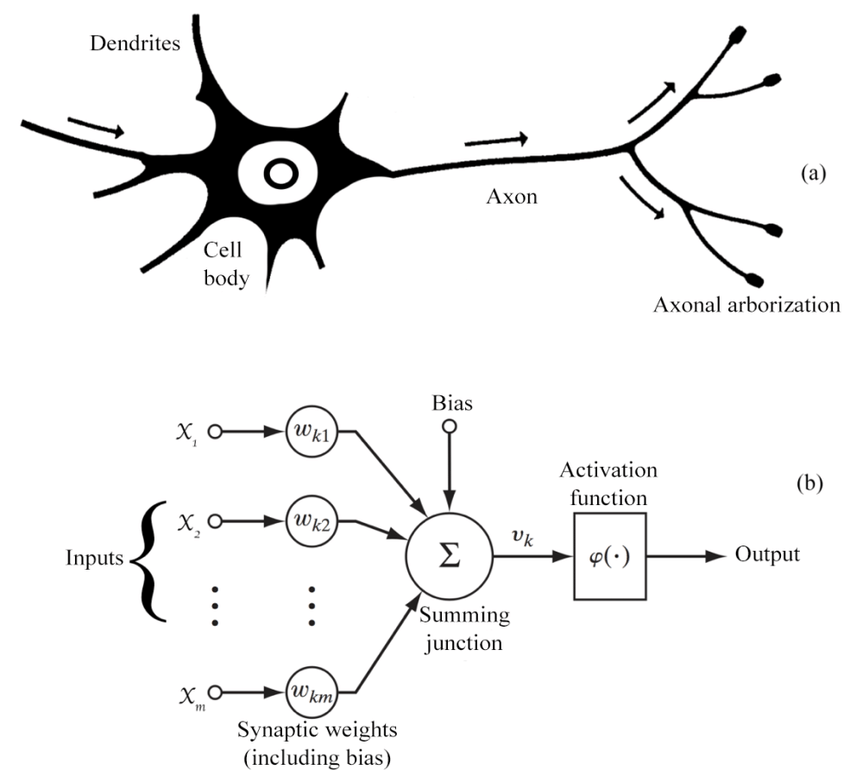

An artificial neuron is a digital construct that seeks to simulate the behavior of a biological neuron in the human brain. A large number of artificial neurons are digitally connected to each other to make up an artificial neural network. The artificial neuron is therefore the fundamental building block of any neural network.

Artificial Neuron

An artificial neuron is a mathematical model that mimics a biological neuron. Each neuron receives one or more inputs, multiplies each by a weight, adds the results together with a bias, and passes that sum through an activation function to produce an output.
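To make this concrete, here is a minimal sketch of a single neuron in plain Python. The names `neuron_output` and `sigmoid` are my own, and the sigmoid is only one possible choice of activation function, picked here for illustration.

```python
import math

def sigmoid(z):
    # One common activation function; it squashes any number into (0, 1).
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias, activation=sigmoid):
    # Weighted sum of the inputs, plus the bias...
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    # ...passed through the activation function.
    return activation(z)

# Two inputs with illustrative weights; the weighted sum here is exactly 0.
example = neuron_output([1.0, 2.0], [0.5, -0.25], bias=0.0)
print(example)
```

Swapping in a different activation only means passing another function as the `activation` argument.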

Weights and Biases

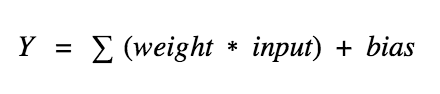

Weights and biases are the learnable parameters of a machine learning model. As inputs are passed from one neuron to the next, the weights are applied to the inputs and the bias is added.

Weights control the strength of the connection between two neurons. A weight decides how much influence an input will have on the output.

A bias is a value that does not depend on the input. Bias units are not influenced by the previous layer, but they do have outgoing connections with their own weights.

How Neural Networks Work

At a very high level, a simple neural network consists of an input layer, an output layer, and one or more hidden layers in between. Each layer is made up of nodes, and the nodes of neighbouring layers are connected, so together they form one large, complex network.

Within each node there are weight and bias values. As an input enters the node, it is multiplied by the weight, the bias is added, and the result is passed on to the next layer in the neural network. In this way a signal travels from one layer to the next until it reaches the final output layer.

This complex underlying structure is what lets computers appear to “think like humans” and produce more sophisticated results.
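The layer-to-layer flow can be sketched in a few lines of plain Python. Everything here is an assumption made for illustration: the helper names `layer_forward` and `network_forward`, the tiny network shape (two inputs, two hidden neurons, one output), the ReLU activation, and the weight and bias numbers.

```python
def relu(z):
    # Activation that keeps positive values and clips negatives to 0.
    return max(0.0, z)

def identity(z):
    # No activation: pass the value through unchanged.
    return z

def layer_forward(inputs, weights, biases, activation):
    # `weights` holds one row per neuron; each neuron sees every input.
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]

def network_forward(inputs, layers):
    # The output of one layer becomes the input of the next.
    signal = inputs
    for weights, biases, activation in layers:
        signal = layer_forward(signal, weights, biases, activation)
    return signal

layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 1.0], relu),  # hidden layer, 2 neurons
    ([[2.0, 1.0]], [-1.0], identity),               # output layer, 1 neuron
]
output = network_forward([3.0, 1.0], layers)
print(output)  # [6.0]
```

Here the hidden layer produces `[2.0, 3.0]`, and the output neuron turns that into `2*2 + 1*3 - 1 = 6`.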

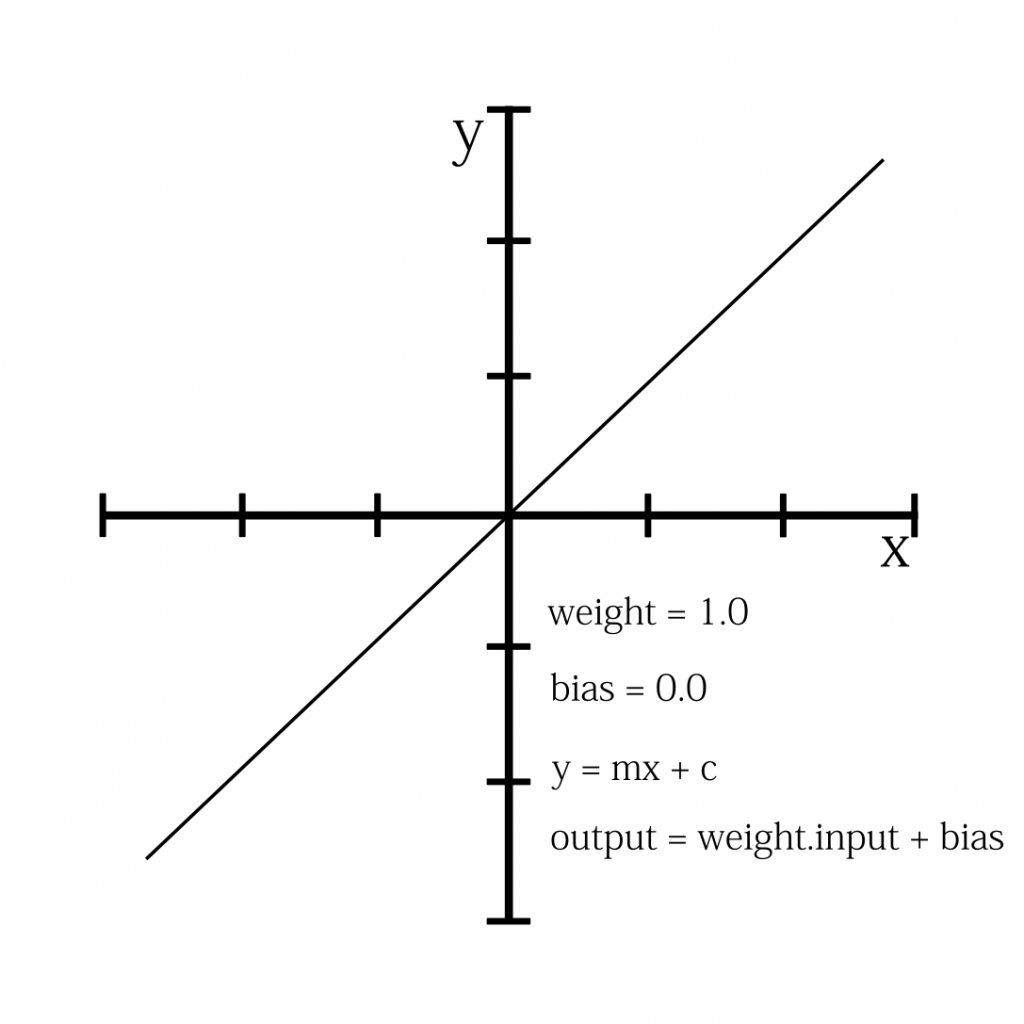

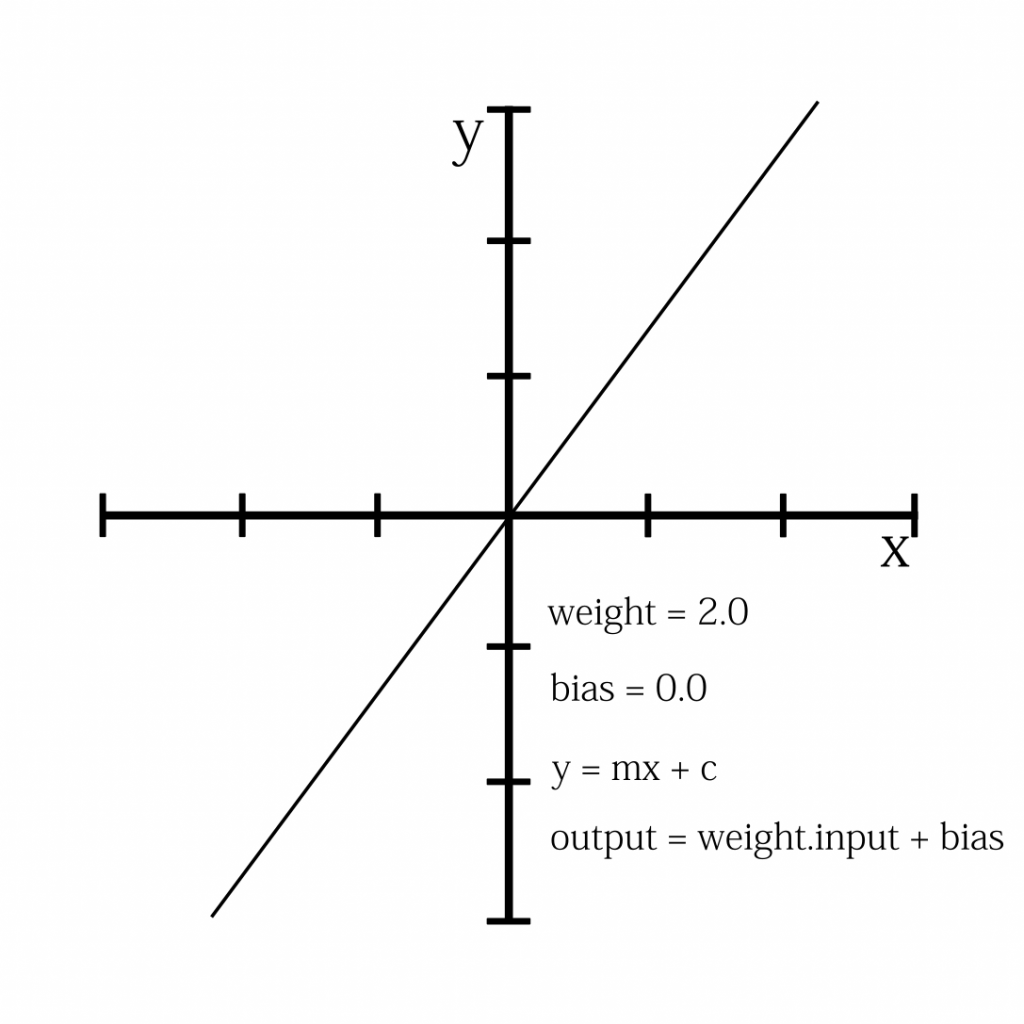

So let’s begin with the output of a single-input neuron with a weight of 1, a bias of 0, and input x.

In the second example we will adjust the weight while keeping the bias unchanged and see how the slope of the function changes.

As you can see above, if we increase the value of the weight, the slope gets steeper. If we reduce the weight, the slope becomes shallower.

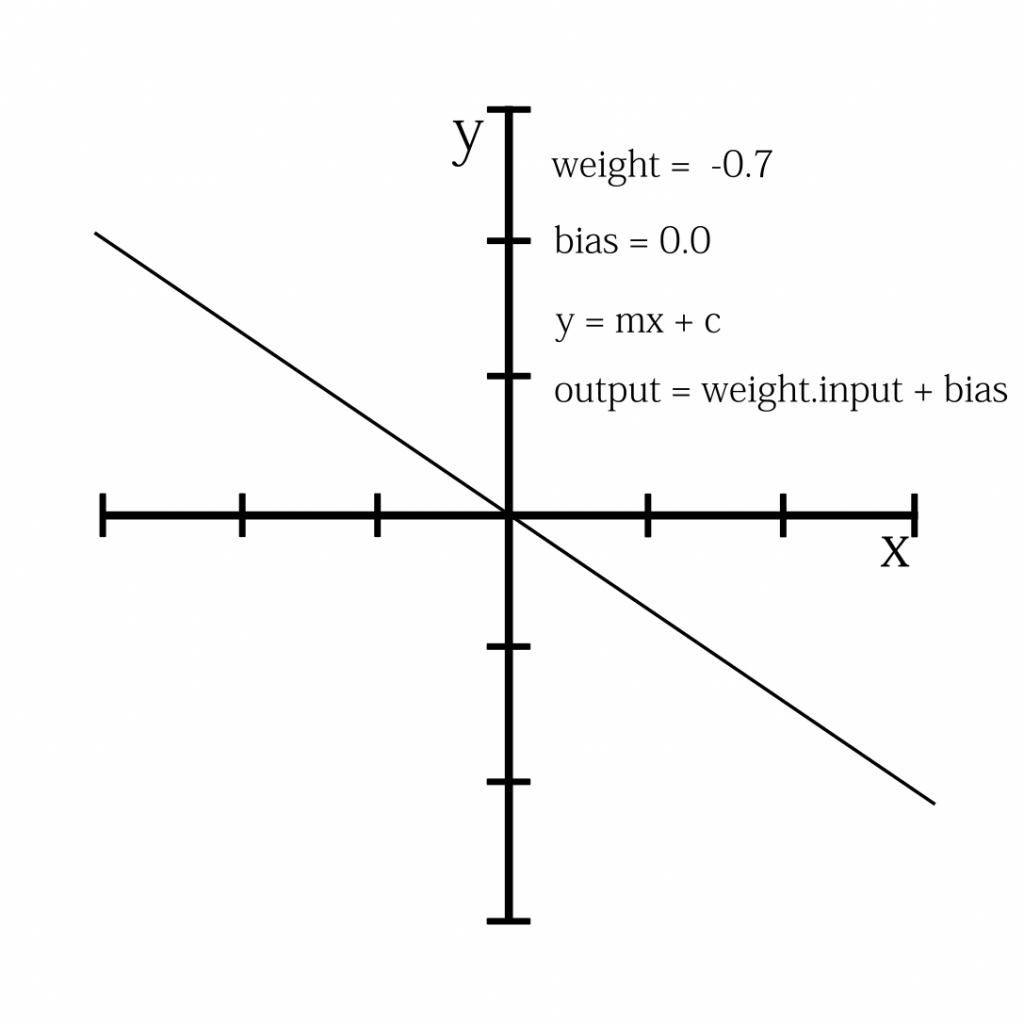

Now, what if we negate the weight? The slope turns negative.

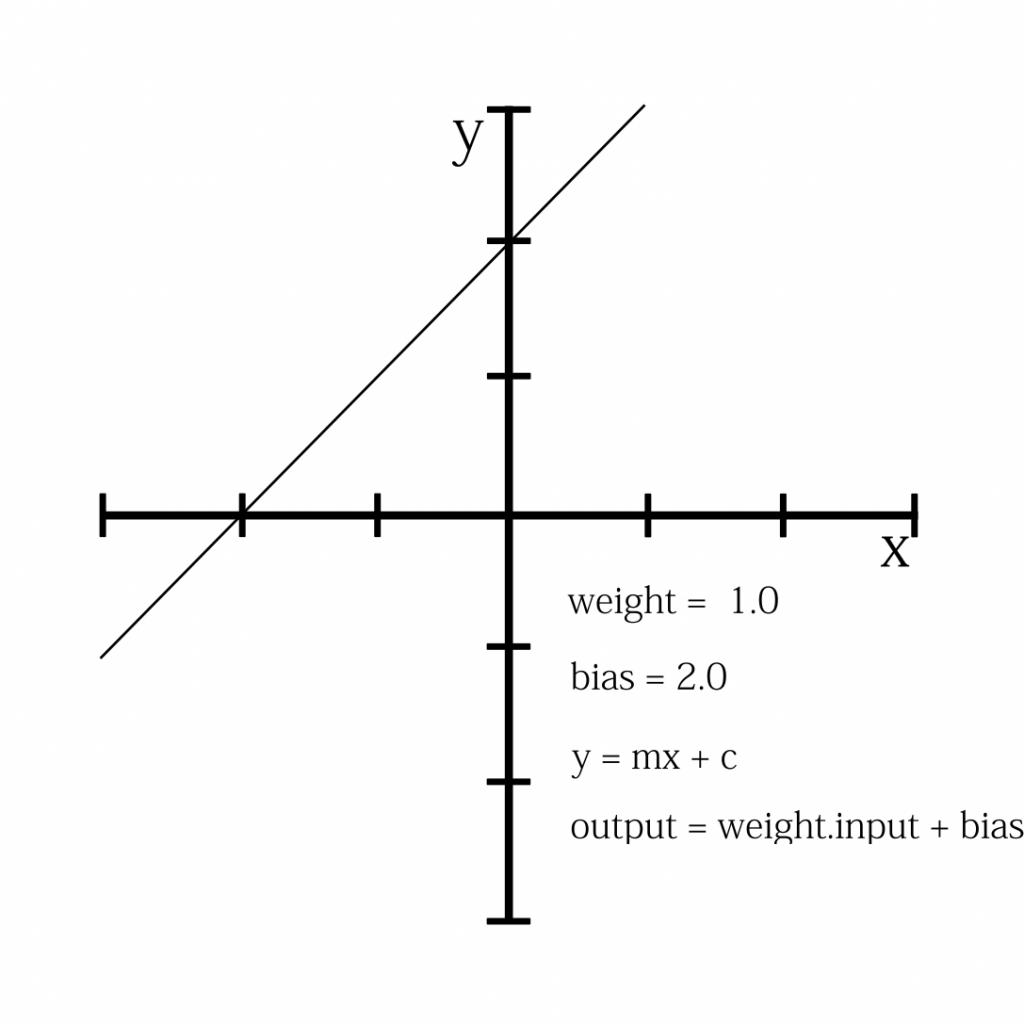
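The same effect can be checked with numbers instead of graphs. This is a small sketch that assumes the neuron in these plots has no activation function, so its output is simply `weight * x + bias`; the function names and the sample weights are mine.

```python
def linear_neuron(x, weight=1.0, bias=0.0):
    # Single-input neuron without an activation function.
    return weight * x + bias

def slope(weight, bias=0.0):
    # Rise over run between x = 0 and x = 1.
    return linear_neuron(1.0, weight, bias) - linear_neuron(0.0, weight, bias)

# Larger weight: steeper. Smaller weight: shallower. Negative weight: downhill.
slopes = {w: slope(w) for w in (1.0, 2.0, 0.5, -1.0)}
print(slopes)  # {1.0: 1.0, 2.0: 2.0, 0.5: 0.5, -1.0: -1.0}
```

With the bias held at 0, the slope of the line is exactly the weight.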

As mentioned earlier, these graphs visualize how the weight affects the output value of a single neuron. Now let’s change things a little. This time we will keep the weight at 1.0 and try different bias values. Let’s start with a weight of 1.0 and a bias of 2.0.

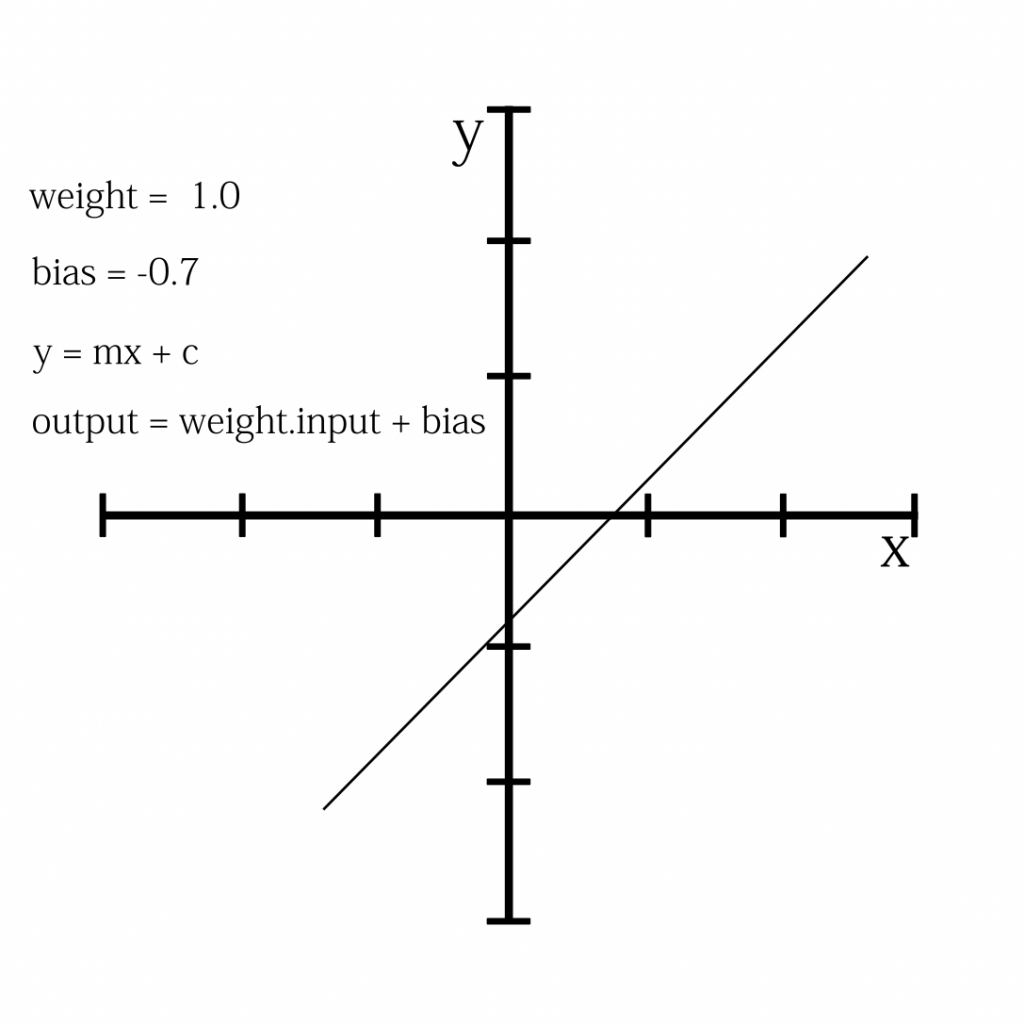

As we increase the bias, the function output shifts upward. If we decrease the bias, the overall function output moves downward, as shown below.

Now we have learnt something about artificial neurons. Artificial neurons mimic how neurons in the human brain work, and each one needs weight and bias values to turn its inputs into an output.
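Here is a quick numeric check of the bias shift, again assuming a single-input neuron with no activation function. The bias of -2.0 is an extra value I picked to show the downward case.

```python
def linear_neuron(x, weight=1.0, bias=0.0):
    # Single-input neuron without an activation function.
    return weight * x + bias

xs = [-1.0, 0.0, 1.0]
baseline = [linear_neuron(x) for x in xs]            # weight 1.0, bias 0.0
raised = [linear_neuron(x, bias=2.0) for x in xs]    # whole line moves up by 2
lowered = [linear_neuron(x, bias=-2.0) for x in xs]  # whole line moves down by 2

print(baseline)  # [-1.0, 0.0, 1.0]
print(raised)    # [1.0, 2.0, 3.0]
print(lowered)   # [-3.0, -2.0, -1.0]
```

Every output moves by the same amount, so the bias shifts the line without changing its slope.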